Program Evaluation for Public Health

The CDC's Introduction to Program Evaluation for Public Health Programs defines program evaluation as:

The systematic collection of information about the activities, characteristics and outcomes of programs to make judgments about the program, improve program effectiveness, and/or inform decisions about future program development.

Program evaluation is therefore distinct from the ongoing, often informal assessments that may occur during a program's lifespan. It is more formal in intent and follows a defined set of guidelines.

While public health evaluation is a complex and varied field, all evaluations aim to answer a few basic questions:

- Is the program meeting its objectives?

- Is it doing so through the originally intended process?

- Why or why not?

Throughout this module we will reflect on a real-world evaluation conducted with the support of the CDC. Below is some background on the Health Bucks program and the context for its evaluation. You will see that the evaluation's initial goals mirror the basic questions inherent in any evaluation.

Introduction to Health Bucks

Communities are continually seeking ways to reduce the impact of food insecurity and improve nutrition for residents. In 2005, the New York City Department of Health and Mental Hygiene's District Public Health offices introduced a pioneering community-based program called Health Bucks to the Bronx, Brooklyn and Manhattan.

This food access initiative centered on coupons distributed to low-income New Yorkers through community-based organizations. The coupons could then be used at any of eleven participating markets during the annual farmers market season (July to November).

Health Bucks was expanded in 2006. SNAP (Supplemental Nutrition Assistance Program) recipients could now use electronic benefit transfer (EBT) wireless terminals installed at farmers markets to purchase fruits and vegetables, without needing physical coupons distributed through local community organizations.

The expansion also added a financial incentive: SNAP recipients at certain markets could receive a two-dollar coupon for every five dollars of EBT credit they spent at the market.
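To make the incentive arithmetic concrete, here is a minimal sketch of the rule as described above. It is illustrative only: the function name `health_bucks_earned` is hypothetical, and it assumes coupons are issued for each *full* five dollars of EBT spending with no per-visit cap. The program's actual rules may have differed.

```python
def health_bucks_earned(ebt_spent_dollars: float) -> int:
    """Return the dollar value of coupons earned for an EBT purchase.

    Hypothetical rule (assumption): one $2 coupon for each full $5 of
    EBT spending, with no cap per visit.
    """
    if ebt_spent_dollars < 0:
        raise ValueError("EBT spending cannot be negative")
    # Work in whole cents to avoid floating-point rounding surprises.
    spent_cents = round(ebt_spent_dollars * 100)
    full_five_dollar_blocks = spent_cents // 500
    return full_five_dollar_blocks * 2


# A shopper spending $12 of EBT credit completes two $5 blocks.
print(health_bucks_earned(12))    # 4
print(health_bucks_earned(4.99))  # 0
```

Under this assumed rule, every full $5 of EBT spending is effectively worth $7 at the market, which is the kind of incentive an outcome evaluation would test against purchasing and consumption.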

The goal of Health Bucks was to increase access to fresh fruits and vegetables by providing financial incentives for low-income New Yorkers to shop at farmers markets, with a focus on the city's high-poverty neighborhoods.

Context for the Evaluation

Key stakeholders, such as the CDC, wanted to explore the program's effectiveness and the feasibility of expanding it. The CDC was responding to a strong need to implement evidence-based programs, particularly at the community level. A full evaluation of the Health Bucks program was intended to:

- Assess program impact on access, purchasing, and consumption of fresh fruits and vegetables among low-income residents eligible for Health Bucks

- Identify drivers of and barriers to program implementation and outcomes, particularly how they may have varied by site or location

- Explore potential areas for improvement

- Assess if and how this program could be expanded to other communities facing similar challenges

Where Do We Begin?

Now that we have a general outline of what an evaluation seeks to achieve, let's look at the different types, or levels, of program evaluation that can be used to meet our evaluation needs:

Types of Program Evaluation

Formative: To "test" various aspects of the program. Does it make sense, and is it applicable? This type of evaluation typically takes place before program implementation or early in the process.

- The goal is to provide feedback on strengths and areas for improvement in the program or its materials, and to explore the overall applicability and feasibility of the project.

- Examples:

- Focus groups with the target audience for a program in development at a local community center

- A brief "pen and paper" survey of participants in a pilot educational program on sexual health for teens to understand what program features they liked and what could be improved.

- One-on-one interviews with current users of a prescription drug to review an informational brochure on medication compliance for content, applicability and ability to produce a call to action

Process: Explore and describe actual program implementation. It can indicate whether the program was implemented with fidelity, as intended. It may help explain why a program did or did not meet its main objectives.

- The goal is to examine how the program was implemented

- Examples:

- Assess what percentage of parents enrolled in an online course on talking to teens about drugs fully completed the online education module.

- Interview program coordinators about internal procedures for training healthcare providers on new guidelines for a hospital-based screening program aimed at identifying the early warning signs of psychosis in their patient population.

Outcome/Impact: Assess the main program objective (or objectives) to determine how the program actually performs. Was the program effective? Did it meet its objective(s)?

- The goal is to determine whether outcomes observed are due to the program

- Examples:

- Randomized controlled trial example: To evaluate a new peer mentor program at a local health center aimed at increasing the percentage of TB patients who adhere to their medication regimens, half of the patients were randomly selected to receive one-on-one peer mentoring, while the other half received the standard discussion with their provider and an informational brochure.

- Quasi-experimental example: A time series design to explore the impact of a new sexual education course within a school district on teen pregnancy. Data on teen pregnancy rates were collected for five years before and five years after program implementation to see whether rates changed after the program began.
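The random assignment in the randomized controlled trial example can be sketched in a few lines. This is an illustrative sketch, not the procedure any particular study used: the function `assign_arms`, the arm labels, and the patient IDs are hypothetical. Shuffling the whole list and splitting it in half gives every patient the same chance of landing in either arm, so that, on average, the two groups are comparable and differences in medication adherence can more credibly be attributed to the peer mentoring program.

```python
import random


def assign_arms(participant_ids, seed=None):
    """Randomly split participants into two arms of (nearly) equal size.

    Arm labels are hypothetical; with an odd count, the extra participant
    goes to the standard-care arm.
    """
    rng = random.Random(seed)  # seeded generator so the assignment can be reproduced
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "peer_mentoring": shuffled[:half],
        "standard_care": shuffled[half:],
    }


# Hypothetical patient IDs for illustration only.
patients = [f"patient-{i:02d}" for i in range(1, 21)]
arms = assign_arms(patients, seed=2024)
print(len(arms["peer_mentoring"]), len(arms["standard_care"]))  # 10 10
```

The time series example, by contrast, has no random assignment: it compares the same population before and after the program, which is why it is classed as quasi-experimental.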

Beyond their focus (formative, process, or outcome), evaluations can also be classified by timing:

- Prospective - designed before the program has been implemented or

- Retrospective - designed and conducted after a program has been implemented

Considering these two timing options, prospective and retrospective, which do you think would be more valuable, and why?

A Framework for Program Evaluation

As we walk through this learning module, we need to remember that evaluations do not exist in a vacuum, but are awash in a sea of context. By their very nature, they must take into account real-world constraints such as time, money, human resources and politics.

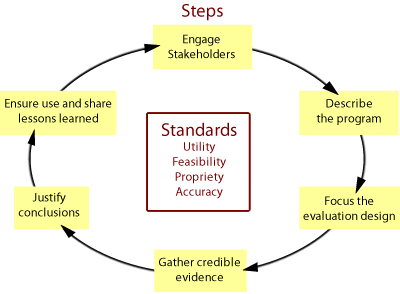

To help, the CDC has outlined six steps as a framework for program evaluations, and identified standards to keep in mind throughout the evaluation process. We provide their schematic and detail the steps and standards below. We will use the six steps as we walk through this module.

- Engage Stakeholders: An accurate, valid evaluation is a major goal for evaluators, but ultimately the strongest evaluation is one that is used to prompt action. You want the results to be relevant and used to improve future programming. To achieve this, a critical step is to consider the evaluation audience (or stakeholders): identify those who are invested in the evaluation questions and results, and bring them to the table as evaluation resources and allies.

- Describe the program: Elucidate and explore the program's theory of cause and effect, outline and agree upon program objectives, and create focused and measurable evaluation questions.

- Focus the evaluation design: Considering your questions and available resources (money, staffing, time, data options), decide on a design for your evaluation. In this step you should weigh the advantages and limitations of each design option, in particular threats to internal and external validity.

- Gather credible evidence: Data collection is essential to support evaluation conclusions and recommendations. What you collect and how you collect it affect how the quality and credibility of the evaluation findings are viewed.

- Justify conclusions: Use the evidence and subsequent data analyses to help answer the main research questions and create conclusions and recommendations based on the findings.

- Ensure use and share lessons learned: An evaluation's primary goal should be usefulness, which requires communicating and sharing your results. Stakeholders should be made aware of the study process and findings, and efforts should be made to ensure the results are incorporated into program decisions.

The CDC's framework should be applied based on a set of standards that have been developed to help guide your choices at each step of the framework. These standards include utility, feasibility, propriety, and accuracy.

Now that you have a brief overview of program evaluation steps and goals, let's try to apply some of these ideas…

Scenario: Helmets While Biking

Let's imagine that the Brookline Public Schools have decided to implement a program reminding middle and high school students of the importance of wearing helmets while biking. Below is a brief description of the program.

Purpose: To increase bike helmet use among 14-18 year olds in Brookline and ultimately reduce the number of traumatic head injuries. Helmets were distributed at middle and high schools, and a campaign was then created to educate students on the use and benefits of wearing a helmet while riding a bicycle, including celebrity speakers brought into classrooms, pamphlets sent home with students, and a broad media campaign in the neighborhood.

Try to match each program question listed below to its corresponding type of evaluation: Formative, Process, or Outcome.

Program Questions

- What percentage of parents report that pamphlets were sent home with their child?

- What is the incidence of brain injury from bike accidents among 14-18 year olds before the program and in the summer after program implementation?

- What are the strengths and areas for improvement in an initial pilot program?

- Were 14-18 year olds satisfied with their helmets?

- What was the percent increase in helmet usage among 14-18 year olds in Brookline?

- What percent of students are receiving in-class instruction on helmet use?

- How many helmets were distributed to each of the schools?

Next, let's apply some of the ideas we have discussed to the Health Bucks Case Study.

The goals of the Health Bucks evaluation include the following:

- Assess program impact on access, purchasing, and consumption of fresh fruits and vegetables among low-income residents eligible for Health Bucks

- Identify drivers of and barriers to program implementation and outcomes, particularly how they may have varied by site or location

- Explore potential areas for improvement

- Assess if and how this program could be expanded to other communities facing similar challenges

Case Study Reflection

Prior to proceeding to the next page, you may wish to jot down responses to the following items. Note that there will be several case study reflections throughout the module.

- List some of the questions you think evaluators and stakeholders should explore via the Health Bucks evaluation.

- Which types of evaluation (Formative, Process, or Outcome) appear most relevant to the situation of Health Bucks? Explain why.