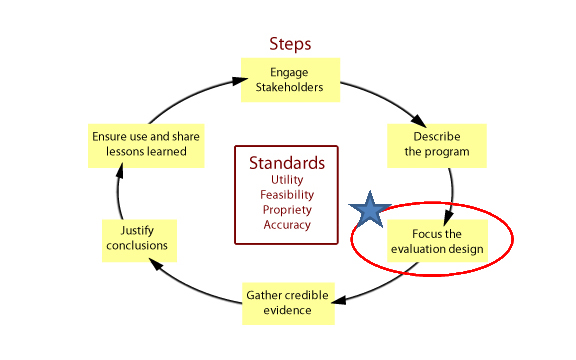

Focus on Evaluation Design

Once you have a detailed program description, you can use it to build a list of possible evaluation questions, choose the most important ones to test, and build an appropriate evaluation design to answer your research questions.

Evaluation Scope

Here is where you make decisions regarding how best to focus your evaluation.

If your program description has highlighted...

- ...the program is new and will not yet have met even its intermediate objectives, then you will want to focus the evaluation on implementation and processes. This will allow you to understand whether the program is being run as intended and whether it fits the program's theory of cause and effect.

- ...the program is well established, then your evaluation can perhaps focus more on the longer-term outcomes and ultimate impact of the program, particularly if the program has undergone prior evaluation for process or short/intermediate outcomes.

If you have built a detailed logic model for the program, this can be a helpful tool to assist in determining where to best focus your evaluation.

So, how do you decide how to focus your evaluation and which type of evaluation to conduct? It will definitely be a case-by-case decision, but the CDC's Introduction to Program Evaluation for Public Health Programs has laid out some helpful criteria to consider:

Ask yourself the following questions.

- What is the purpose of the evaluation?

- Who will use the evaluation results?

- How will they use the evaluation results?

- What do other key stakeholders need from the evaluation?

- What is the stage of development of the program?

- How intensive is the program?

- What are relevant resource and logistical considerations?

Case Study Reflection

Consider once again our Health Bucks case study.

Based on the program information and background provided thus far, what type of evaluation do you think would be most pertinent at this stage, and why?

Define Evaluation Questions

Revisiting the specific program objectives can be very helpful when building your evaluation questions. With these objectives and your previously determined focus criteria, you can now create specific questions about the program's impact and implementation.

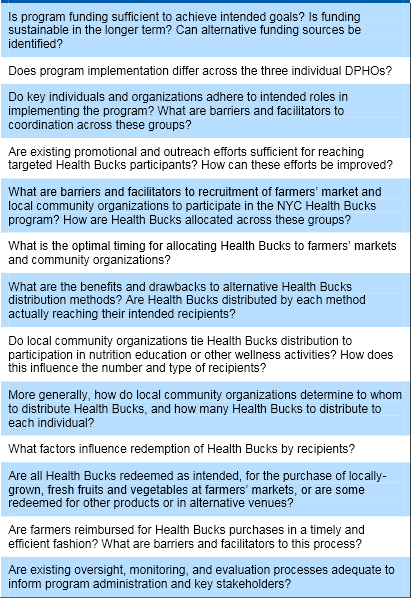

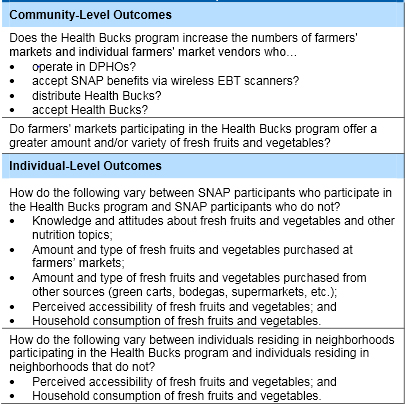

Below is a sampling of questions evaluators used for Health Bucks.

Process Evaluation Questions

Forming evaluation questions may seem like a tedious or unimportant step, but it is in fact critical to evaluation success. Keep in mind several important aspects of your questions as you form, iterate on, and finalize them:

- Your questions should reflect the objectives and/or theory of implementation of your program

- The more specific you can make your questions, the better

- Use active language

- Questions should be not only clear but also measurable

Practice writing evaluation questions

Use the following case to practice writing evaluation questions.

Program Description for Online Weight Management

Individuals are screened by their primary care physician. Any individual with a BMI over 30 is offered the opportunity to participate in a clinic-sponsored weight loss program.

The program entails 15 hours of online counseling with a dietician over 3 months and 15 hours of counseling with a physical trainer. In addition to drawing up a healthy eating and activity program that meets their personal goals and needs, participants are connected via the online portal to a support community for a 6-month period. They are each assigned a coach who has previously lost weight themselves and who has completed a week-long training regarding their role. Coaches are there to offer encouragement, support, and guidance.

As part of the program, participants are required to weigh in 3 times over the weight loss period: at the beginning, at 3 months, and at 6 months. At the end of the 3-month period, the goal is that individuals will be following their weight loss and diet plans and will have lost at least 10 pounds.

By the end of the 6-month period, the goal is for patients to have achieved and sustained a total weight loss of 15 pounds or more.
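Because these goals are stated as numbers, they can be checked directly against the three weigh-ins. The following is a minimal sketch in Python, not part of the program materials: the `WeighIns` record, the function names, and the four participant records are all hypothetical and exist only to illustrate what "measurable" means here. It covers only the weight component of the goals; whether participants are following their plans, or have sustained the loss over time, would need other data.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class WeighIns:
    """Weights in pounds at the three required weigh-ins (hypothetical record)."""
    baseline: float
    month_3: float
    month_6: float


def met_three_month_goal(w: WeighIns, target_lb: float = 10.0) -> bool:
    """True if the participant lost at least `target_lb` pounds by 3 months."""
    return (w.baseline - w.month_3) >= target_lb


def met_six_month_goal(w: WeighIns, target_lb: float = 15.0) -> bool:
    """True if total loss at the 6-month weigh-in is at least `target_lb` pounds."""
    return (w.baseline - w.month_6) >= target_lb


def share_meeting(goal: Callable[[WeighIns], bool],
                  records: Iterable[WeighIns]) -> float:
    """Proportion of participants for whom `goal` is true (0.0 if no records)."""
    records = list(records)
    if not records:
        return 0.0
    return sum(goal(r) for r in records) / len(records)


# Illustrative, made-up records; not data from any real program.
participants = [
    WeighIns(baseline=240.0, month_3=228.0, month_6=222.0),  # lost 12, then 18 total
    WeighIns(baseline=215.0, month_3=209.0, month_6=203.0),  # lost 6, then 12 total
    WeighIns(baseline=260.0, month_3=249.0, month_6=246.0),  # lost 11, then 14 total
    WeighIns(baseline=198.0, month_3=190.0, month_6=182.0),  # lost 8, then 16 total
]

print(share_meeting(met_three_month_goal, participants))
print(share_meeting(met_six_month_goal, participants))
```

An evaluation question phrased this way ("What proportion of participants lost at least 10 pounds by the 3-month weigh-in?") names the measure, the threshold, and the time point, which is what makes it answerable.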

Below is a series of questions about the program described above. Please mark as True those outcome evaluation questions you think the team should move forward with in their evaluation. Mark as False those that are weak and should be modified.

Case Study Reflection

Now, take some time to build 4 additional potential questions that could be explored during the online weight loss program evaluation.

Feel free to come up with entirely new questions or re-work some of the weak questions above.

Study Design

Let's return for a moment to the three different types of evaluations that were outlined at the beginning of this module. You may decide based on your questions that one "type" or a combination of the types fits best with your research goals.

Types of Program Evaluation

Formative: To "test" various aspects of the program. Does it make sense and is it applicable? This typically takes place prior to program implementation or early on in the process.

- The goal is to provide feedback on strengths and areas of improvement for the program, or program materials, as well as explore overall applicability and feasibility of the project.

Process: Explore and describe actual program implementation. It can indicate whether the program was implemented with fidelity, as intended. It may help explain why a program did or did not meet its main objectives.

- The goal is to examine how the program was implemented

Outcome/Impact: Assess the main program objective (or objectives) to determine how the program actually performs. Was the program effective, did it meet the objective(s)?

- The goal is to determine whether outcomes observed are due to the program

Once you have decided on your questions and evaluation type, it is time to develop your study design.

For the purpose of this module, we will focus mainly on outcome evaluation and the corresponding designs. Please note that some of these may still be applicable to a process or formative evaluation as well, or that you could include some amount of formative and/or process evaluation with your outcome design.

On a high level, there are three different types of research designs used in outcome evaluations:

- Experimental designs

- Quasi-experimental designs

- Observational designs

The study design should take into consideration your research questions as well as your resources (time, money, data sources, etc.). You should also consider the pros and cons of the various research designs, paying particular attention to the level of scrutiny you require as well as the threats to internal and external validity that come with each design.

Internal Validity

Internal validity is the ability of the study design to support accurate causal inferences regarding program outcome(s). The impact of your design choice on internal validity should always be considered. Researchers suggest five requirements for establishing the internal validity of your design (Blalock 1964; Cook and Campbell 1979; Grembowski 2001):

- "A theoretical, conceptual, or practical basis for the expected relationship exists, as defined by the program's theory

- The program precedes the outcome in time

- Other explanations are ruled out

- A statistically significant association exists between the program and the outcome (the outcome is not due to chance)

- Outcome measures are reliable and valid"
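The fourth requirement, a statistically significant association, is the one most easily shown in code. The sketch below is one possible way to check it, assuming a simple two-group comparison: a permutation test on the difference in mean outcomes between a program group and a comparison group. The function name, the group sizes, and all of the numbers are hypothetical and chosen only for illustration; the cited authors do not prescribe this particular test.

```python
import random
from statistics import mean
from typing import Sequence


def permutation_p_value(program: Sequence[float],
                        comparison: Sequence[float],
                        n_permutations: int = 10_000,
                        seed: int = 0) -> float:
    """Two-sided permutation test for a difference in group means.

    Repeatedly shuffles the pooled outcomes, re-splits them into two groups
    of the original sizes, and counts how often the shuffled difference in
    means is at least as large (in absolute value) as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    observed = mean(program) - mean(comparison)
    pooled = list(program) + list(comparison)
    n_program = len(program)

    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_program]) - mean(pooled[n_program:])
        if abs(diff) >= abs(observed):
            extreme += 1

    # Add-one correction keeps the estimated p-value strictly above zero.
    return (extreme + 1) / (n_permutations + 1)


# Illustrative, made-up outcomes (pounds lost); not data from any real program.
program_loss = [12.0, 15.5, 9.0, 18.0, 14.0, 11.5, 16.0, 13.0]
comparison_loss = [3.0, 5.5, 1.0, 6.0, 4.0, 2.5, 7.0, 0.5]

p = permutation_p_value(program_loss, comparison_loss)
print(f"estimated p-value: {p:.4f}")
```

A small p-value addresses only this one requirement. It says the difference is unlikely to be due to chance, not that other explanations have been ruled out, so the remaining four requirements still depend on the study design and the quality of the outcome measures.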

External Validity

External validity is whether the results seen in the program evaluation can be generalized to other settings.

This often comes into play after the evaluation is completed and the data are interpreted, when you want to understand not only how the program impacted the target population but also whether the program could be applicable outside of its current setting.