Malaria

Authors:

Maya Guttman-Slater

Oluwadolapo Lawal

Maura Soucy

Jennifer Sun

Introduction

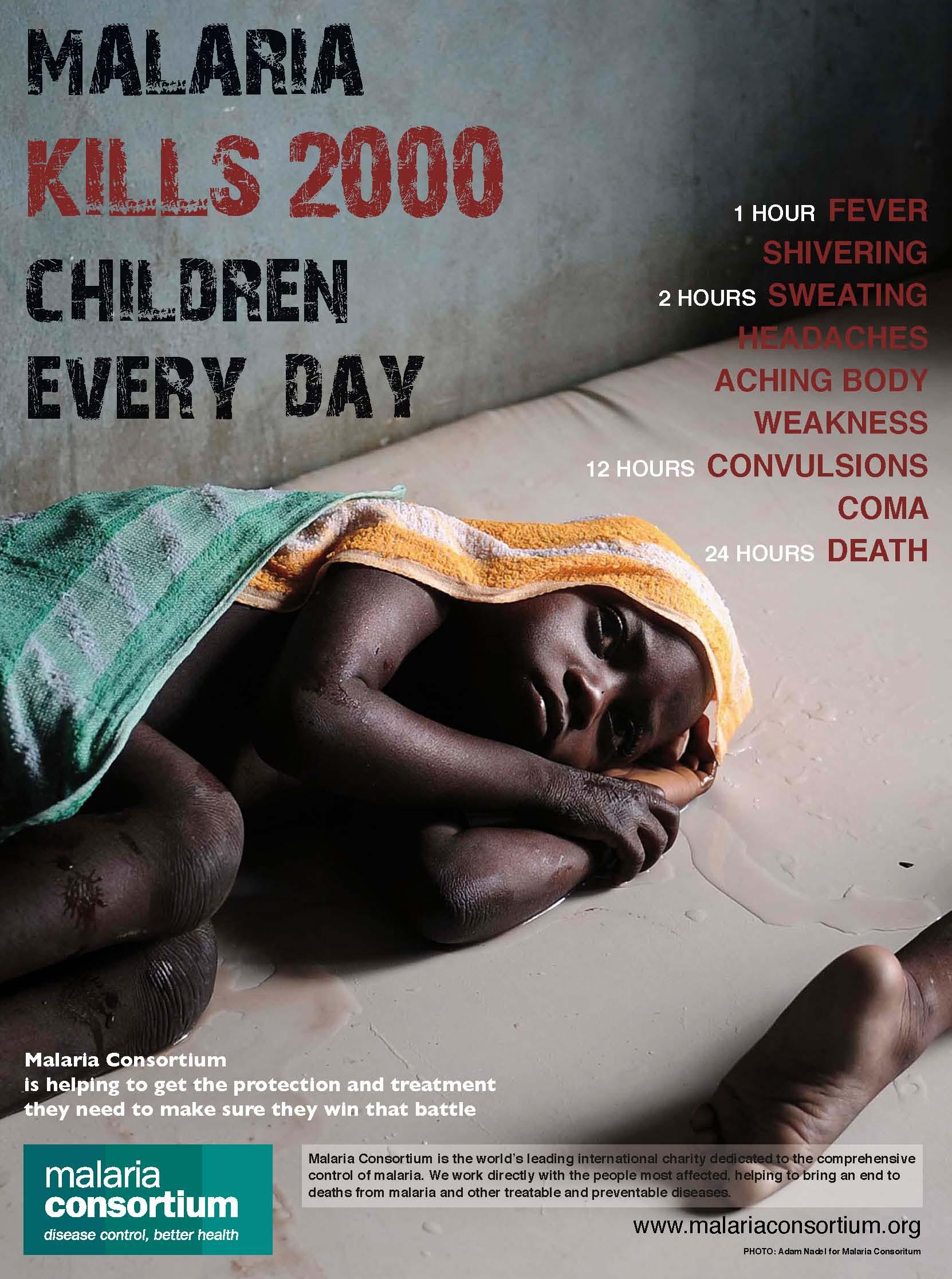

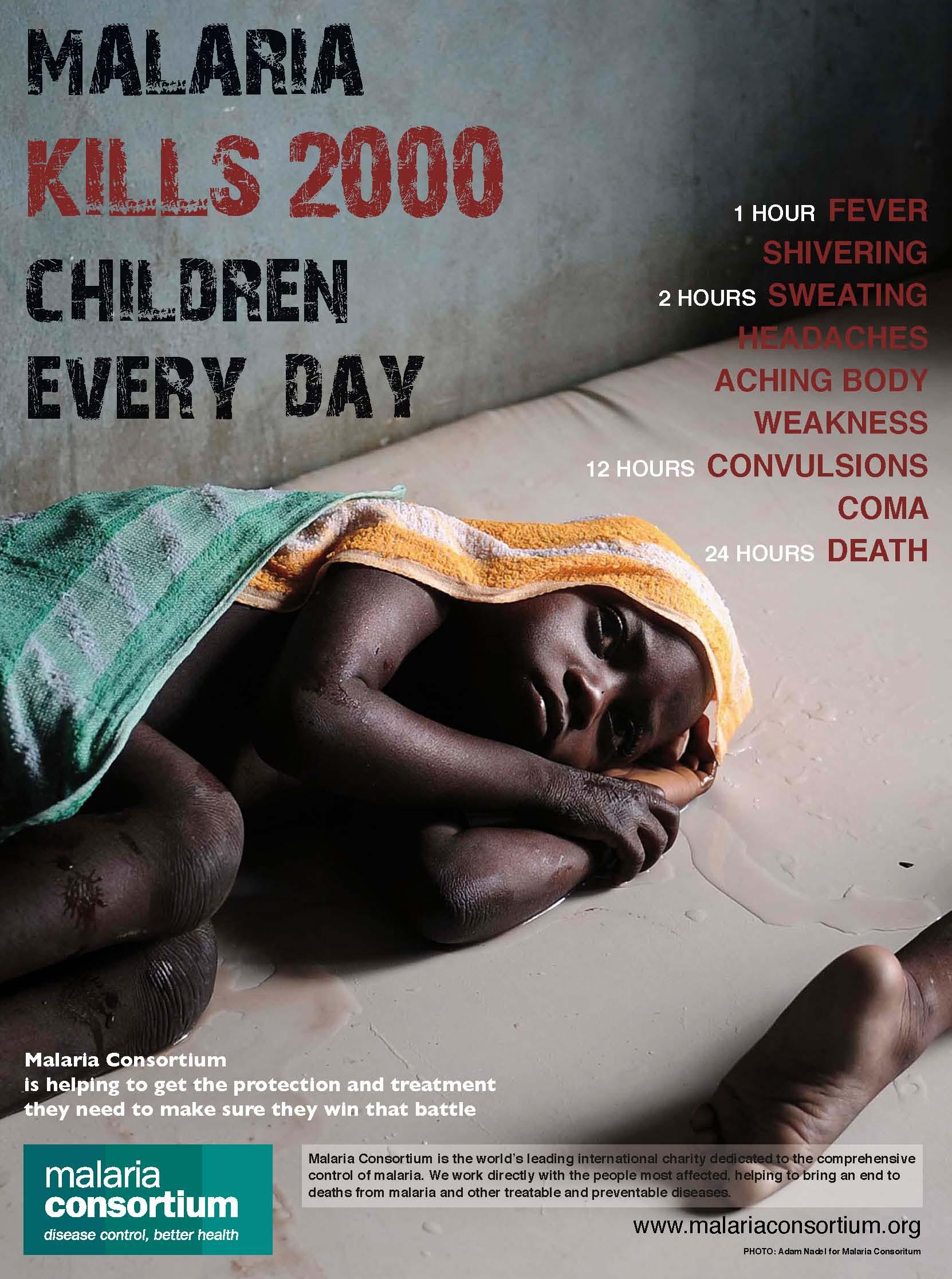

Malaria is one of the most common diseases in the world, and also one of the most deadly. According to the WHO, approximately half of the global population is at risk for malaria infection. Once a person is infected, symptoms can be serious and, in about 20% of cases, are fatal. Approximately 660,000 deaths are caused by malaria each year, and about 91% of these occur in sub-Saharan Africa. More than 85% of these deaths occur in children.

Malaria is spread through the bite of infected mosquitoes, which carry the Plasmodium parasite. The disease is highly transmissible, and five different species of Plasmodium cause malaria in humans.

This module summarizes the causes of malaria, including brief descriptions of the life cycle of the Plasmodium parasite and of symptoms and outcomes in adults and children who have become infected. It then examines environmental factors that influence the incidence of malaria and measures to control it, and summarizes issues regarding the development of drug resistance in Plasmodium and pesticide resistance in mosquitoes. The module concludes with an outlook on the possibility of eradication and future means of prevention and control.

Learning Objectives

Geographic Distribution

The map on the left shows the geographic variation in prevalence of malaria. Areas in red have widespread malaria, while green shading illustrates areas in which malaria does not occur. Yellow shading indicates malaria in some locations.

Several factors contribute to high prevalence in Africa. These include an efficient vector, i.e., the Anopheles mosquito, the high prevalence of Plasmodium falciparum (the parasite that causes the most severe cases of malaria), weather conditions that encourage year-round transmission, and inadequate malarial control and prevention measures due to inadequate resources and socio-economic structures in many African nations. Malaria is also a significant problem in Southeast Asia and parts of Central and South America.

[Map source: CDC]

The Vector

The female Anopheles mosquito is the sole transmitter of the Plasmodium parasite, since females require blood meals for egg development and males do not. Transmission of Plasmodium depends on the mosquito's ability to bite, and thereby inject the parasite into a human, as well as on its lifespan. The longer a mosquito lives, the more developed the parasite can become before it is transmitted. In many parts of Africa, Anopheles mosquitoes live longer than elsewhere, due in large part to inadequate mosquito control in rural areas. This makes them more deadly, because they are more likely to pass on a mature parasite in the late stage of development (as a sporozoite). Complete maturation of the parasite before transmission requires an adult mosquito lifespan of at least 10-18 days.

Anopheles mosquitoes are active at night, and tend to bite most between dusk and dawn.

Click here for more information on the Anopheles mosquito.

Transmission & Immunity

Populations in areas where malaria is highly prevalent may be exposed to the parasite for many years and, as a result, can develop partial immunity. Partial immunity reduces the risk of more serious symptoms, but it cannot provide full protection against the disease. The longer the immune system interacts with the parasite at low levels, the greater the degree of protection, which reduces disease severity upon later infection. Children are therefore at greater risk of death from malaria, because they have not been exposed for as long as adults.

Populations at increased risk for severe malaria

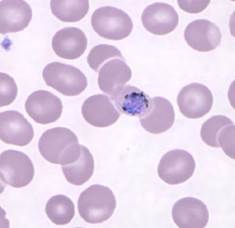

The Parasite

There are five species of the Plasmodium parasite that can cause malaria in humans.

|

Plasmodium species

|

About

|

Gametocyte Phase

|

|

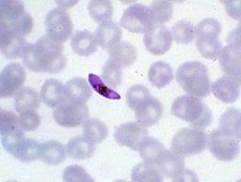

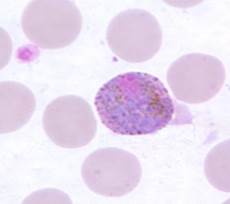

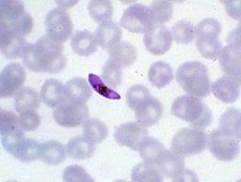

Plasmodium falciparum

|

- One of the most common species, along with P. vivax

- Most deadly species

- Found throughout Africa

- Unique crescent shaped gametocyte

|

|

|

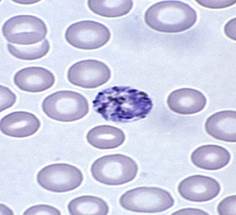

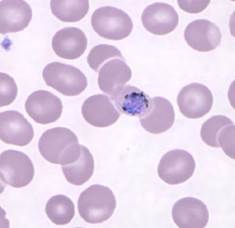

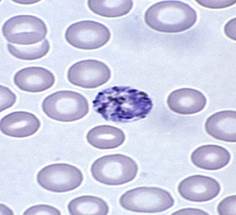

Plasmodium vivax

|

- One of the most common species, along with P. falciparum

- Found mostly in Asia and South America

|

|

|

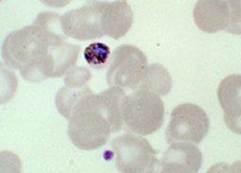

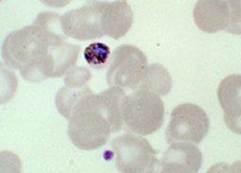

Plasmodium malariae

|

- Generally develops into mild clinical cases

- Lower prevalence than P. falciparum and P. vivax

- Found in South America, Asia and Africa

|

|

|

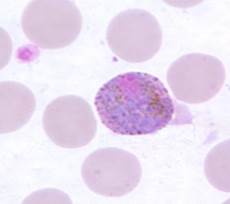

Plasmodium ovale

|

- Second most common strain in Africa (after P. falciparum)

- Biologically and morphologically similar to P. vivax

|

|

|

Plasmodium knowlesi

|

- Previously found only in macaque monkeys

- Found primarily in South-East Asia

- Difficult to diagnose due to the trophozoite stages and erythrocytic stages being almost indistinguishable from P. falciparum and P. malariae, respectively

|

|

This article provides more information on the biological differences between the Plasmodium species.

The Malaria Life Cycle

The life cycle of Plasmodium is quite complex, involving many different developmental stages of the parasite in both mosquito vectors and humans. The key stages are highlighted in the gold boxes in the figure below.

The two short videos below give nice overviews of the life cycle.

The links below provide additional information about the biology of malaria:

- WHO: 10 facts on malaria

- CDC: Biology of Malaria

- WHO: Malaria

Signs and Symptoms of Malaria

Individuals infected with malaria experience symptoms relating to many systems in the body, including the nervous, circulatory, muscular, and respiratory systems. The magnitude of symptoms varies from uncomplicated to severe, and children are more likely to develop severe infections.

Uncomplicated malaria generally has symptoms which last 6-10 hours and involves three stages related to changes in body temperature:

- A cold stage (including shivering)

- A hot stage (including fever)

- Fever breaking (sweats and return to normal temperature)

Additional symptoms of uncomplicated malaria include nausea, headaches, vomiting, body aches, increased respiratory rate, and general malaise. Signs of malaria may include mild jaundice and enlargement of the liver or spleen. Left untreated, uncomplicated malaria can progress to severe malaria in as little as 24 hours.

Severe malaria refers to cases that progress to hemolysis, which can result in severe anemia, hyperparasitemia, abnormal blood coagulation, acute kidney failure, hypoglycemia, and metabolic acidosis. The nervous system can also be affected, resulting in cerebral malaria. Symptoms of cerebral malaria may include abnormal behavior, impairment of consciousness, seizures, coma or other neurologic abnormalities.

Historical Control of Malaria

Malaria has afflicted people for much of human history. Its prevention and treatment have been investigated in science and medicine for hundreds of years. These studies continue to the present day, and eradication or elimination of malaria still seems a distant goal. As malaria remains a major public health problem, causing 250 million cases of fever and approximately one million deaths annually, understanding its history is key.

Timeline of Discoveries and Disease Control Methods

DDT - Attempts at Eradication Post-WWII

After the Second World War the international community turned its attention to the health problems of peacetime. Malaria was rampant throughout much of the developing world, and in the 1950s the World Health Organization (WHO) considered a programme for eradicating malaria similar to that used to eradicate smallpox. The auguries were good: chloroquine was highly effective against the Plasmodium parasite in humans, the new insecticide dichloro-diphenyltrichloroethane (DDT) killed the vector (Anopheles) and draining marshes removed the mosquitoes' breeding grounds.

A mathematical model was developed by Macdonald to explain the dynamics of malaria and how the disease could be eradicated. Using this model, a strategy in four phases was formulated:

- Preparation - Gather epidemiologic and entomologic information to plan attack

- Attack - Massive indoor residual spraying (IRS) of every at-risk house with DDT until the number of infective carriers was at or near zero

- Consolidation - Active case finding and treatment with chloroquine/quinine

- Maintenance - Continue until global eradication achieved
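
The way transmission depends on mosquito density, biting and survival can be illustrated with the basic reproduction number as it is commonly written in the Ross-Macdonald framework, R0 = m a^2 b c p^n / (r (-ln p)). The sketch below is only an illustration of that textbook form, not a reconstruction of Macdonald's original analysis; the parameter names and every numeric value are hypothetical and chosen for demonstration.

```python
import math

def basic_reproduction_number(m, a, b, c, p, n, r):
    """R0 in the commonly cited Ross-Macdonald form.

    m: female mosquitoes per human
    a: human bites per mosquito per day
    b: probability an infectious bite infects a human
    c: probability a mosquito is infected by biting an infectious human
    p: daily survival probability of a mosquito (0 < p < 1)
    n: days the parasite needs to mature inside the mosquito
    r: daily human recovery rate
    """
    if not 0.0 < p < 1.0:
        raise ValueError("p must be strictly between 0 and 1")
    mosquito_death_rate = -math.log(p)
    return (m * a**2 * b * c * p**n) / (r * mosquito_death_rate)

# Hypothetical values, for illustration only.
baseline = dict(m=10.0, a=0.3, b=0.5, c=0.5, p=0.9, n=12, r=0.01)
r0_before = basic_reproduction_number(**baseline)

# Assumption: spraying shortens mosquito life, modeled as lower daily survival.
sprayed = dict(baseline, p=0.7)
r0_after = basic_reproduction_number(**sprayed)

print(f"R0 without spraying: {r0_before:.1f}")
print(f"R0 with spraying:    {r0_after:.2f}")
```

Because survival enters as p raised to the power n, a modest drop in daily mosquito survival cuts transmission sharply in this model, which is consistent with an attack phase aimed at adult mosquitoes.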

Initially, spraying houses with DDT was a success: the number of cases of malaria in Sri Lanka, for instance, fell from 3 million in 1946 to only 29 in 1964. Also, Australia, Brunei, Bulgaria, Cuba, Dominica, Grenada, Hungary, Italy, Jamaica, Mauritius, Netherlands, Poland, Portugal, Puerto Rico, Romania, Saint Lucia, Singapore, Spain, Taiwan, Trinidad and Tobago, United States, Virgin Islands and Yugoslavia were able to successfully eradicate malaria. However, complications of this policy soon became apparent when resistance to chloroquine and environmental toxicity of DDT were reported.

In 1962, Silent Spring by American biologist Rachel Carson was published. The book catalogued the environmental impacts of the indiscriminate spraying of DDT in the US and questioned the logic of releasing large amounts of chemicals into the environment without fully understanding their effects on ecology or human health. The book suggested that DDT and other pesticides may cause cancer and that their agricultural use was a threat to wildlife, particularly birds. This resulted in a large public outcry that eventually led to DDT being banned for agricultural use in the US in 1972. DDT was subsequently banned for agricultural use worldwide under the Stockholm Convention, but its limited use in disease vector control continues to this day and remains controversial.

Click here for the Stockholm Convention guidelines on DDT use

Furthermore, draining all the marshes in tropical parts of the world proved impossible, and chloroquine showed signs of decreasing efficacy. Plasmodium had acquired resistance to chloroquine and, during the 1970s, regions of chloroquine resistance gradually spread throughout the world. Today, there are resistant strains of Plasmodium in many areas. Resistance probably arose because of poor compliance, a major problem in bringing complex and expensive drug treatments to countries with little health infrastructure.

Click here for the WHO position on DDT use.

Emergence of Drug Resistance

The WHO defines antimalarial drug resistance as "the ability of a parasite strain to survive and/or multiply despite the proper administration and absorption of an antimalarial drug in the dose normally recommended." Resistance develops in two phases: the initial genetic event where resistance mutations occur; and the ensuing process where the survival advantage developed by the mutants in the presence of the drug results in their preferential transmission, thus spreading resistance. In the absence of antimalarial medicines, the mutants could potentially be at a survival disadvantage. Microbial resistance occurs as a result of random mutations that interfere with a given drug's mechanism of action. For example, if a drug's action depends on binding to a specific protein in the infectious agent, a random mutation might result in a slight alteration in the shape of the protein such that binding can no longer occur. Another drug might inhibit DNA replication within the parasite. Random mutations that render an infectious agent resistant to a particular drug are rare, but they are virtually inevitable given a large enough number of DNA replications in a parasitic species.
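
The claim that rare mutations become virtually inevitable over enough replications follows from simple probability: if each replication independently produces a resistance mutation with probability mu, the chance of at least one mutant in N replications is 1 - (1 - mu)^N. The sketch below is a minimal illustration under that independence assumption; the mutation rate used is hypothetical.

```python
import math

def prob_at_least_one_mutant(mutation_rate, replications):
    """P(at least one resistance mutation) = 1 - (1 - mutation_rate)**replications."""
    # log1p and expm1 keep precision when mutation_rate is tiny.
    return -math.expm1(replications * math.log1p(-mutation_rate))

# Hypothetical per-replication mutation rate, for illustration only.
rate = 1e-9
for replications in (1e6, 1e9, 1e12):
    p = prob_at_least_one_mutant(rate, replications)
    print(f"{replications:.0e} replications -> P(mutant) = {p:.6f}")
```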

Resistance to antimalarial drugs is a major threat to malaria control and has been documented in all classes of antimalarials, including the artemisinin derivatives. P. falciparum resistance to chloroquine first emerged in the late 1950s in South-East Asia and spread to other areas in Asia and then to Africa over the following three decades. Resistance to sulfadoxine-pyrimethamine originated in the same area of South-East Asia and spread rapidly to Africa. In the Amazon region of South America, resistance to chloroquine and sulfadoxine-pyrimethamine emerged independently and spread throughout the continent. After widespread use, resistance to mefloquine appeared in the Mekong region of Asia within five years of its introduction in the 1990s. Although resistance has forced most malaria-endemic countries to abandon chloroquine for P. falciparum treatment, chloroquine remains the first-line treatment for P. vivax. However, this treatment is being threatened by the emergence and spread of chloroquine-resistant strains of that parasite.

Watch a video showing the spread of resistance and an interesting DNA tracking project

Virulence factors are those factors that increase the damaging effects of an infective organism. They serve to make the organism more infectious, increase its ability to invade, increase its evasiveness to immune response, or worsen the severity of disease. Drug resistance is a special kind of virulence factor that enables the pathogen to survive in the face of normally effective chemotherapy. Organisms typically have several virulence factors expressed at one time, since the "normal" pathogen employs several to invade and establish infection and disease. Drug resistance usually emerges only in the presence of widespread use of a treatment regime, and typically decreases after cessation of this type of treatment.

Drug resistance is typically considered to exact a "biological cost" or "fitness cost" on an organism. In other words, a mutation that confers the benefit of drug resistance may also have some adverse effects on the pathogen, such as slower growth. As a result, resistance to a given drug may provide a survival advantage only in the continued presence of the drug, i.e., the continued presence of a selection pressure that favors drug-resistant strains. It has been shown repeatedly that, once certain drugs are discontinued, the prevalence of resistance to those drugs also decreases (Stein et al.), because discontinuation of the drug removes the selection pressure that favored the resistant strain, and the other biological effects of the mutation then put that strain at a competitive disadvantage.
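
The interplay between selection pressure and fitness cost can be sketched with a simple two-strain competition model in which each strain's share of the next generation is proportional to its relative fitness. This is an illustrative toy model, not one taken from the cited studies; the fitness cost, the drug kill fraction and the starting frequency are all hypothetical.

```python
def next_frequency(freq_resistant, fitness_resistant, fitness_sensitive):
    """One generation of selection between two competing parasite strains."""
    resistant = freq_resistant * fitness_resistant
    sensitive = (1.0 - freq_resistant) * fitness_sensitive
    return resistant / (resistant + sensitive)

def simulate(freq, generations, drug_present, cost=0.05, drug_kill=0.6):
    """Track the resistant-strain frequency over several generations.

    cost: hypothetical growth penalty carried by the resistance mutation.
    drug_kill: hypothetical fraction of sensitive parasites the drug removes.
    """
    fitness_resistant = 1.0 - cost
    fitness_sensitive = (1.0 - drug_kill) if drug_present else 1.0
    history = [freq]
    for _ in range(generations):
        freq = next_frequency(freq, fitness_resistant, fitness_sensitive)
        history.append(freq)
    return history

# Resistance spreads while the drug is used, then recedes after withdrawal.
on_drug = simulate(0.001, generations=10, drug_present=True)
off_drug = simulate(on_drug[-1], generations=100, drug_present=False)
print(f"start {on_drug[0]:.3f}, after drug use {on_drug[-1]:.3f}, "
      f"after withdrawal {off_drug[-1]:.3f}")
```

In this sketch the resistant strain wins only while the drug suppresses its competitor; once the drug is withdrawn, the small fitness cost slowly drives its frequency back down.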

It is widely believed that drug resistance develops only in the presence of inadequate chemotherapy regimes, for example the administration of sub-optimal doses that do not eliminate infection but instead select for resistant strains. Recent work by Schneider et al. suggests an alternative pathway for drug resistance to develop, and its conclusions have far-reaching implications for both the development of drug resistance and our ability to combat it. Schneider et al. infected mice with two genetically related strains of P. chabaudi, one virulent and one avirulent, and discovered that the virulent strain had a high level of resistance to pyrimethamine. The virulent strain had not developed resistance in an environment of low-dose administration of the drug; instead, resistance developed in conjunction with other virulence factors. In essence, the resistance mechanism conferred fitness, rather than exacting a fitness cost on the organism.

Specifically, this case of resistance is tied to the way pyrimethamine acts on the organism. The virulent strain had an altered folate biosynthesis pathway, which gave it the advantage of faster multiplication. The altered metabolism of the organism makes this change self-sustaining, so it does not require any selective pressure to maintain its biological advantage. The change also alters the way the organism interacts with pyrimethamine, making it less susceptible to the drug almost as a side effect.

This marks the first known time that drug resistance has developed independently of drug administration and has conferred additional advantages that allow such strains to thrive, even in the absence of selective pressure from drug therapy. The scientific community is cautiously regarding this instance as a singular event, owing to the nature of this specific pathway and its role in both drug interaction and metabolism.

Current Practices and Environmental Factors

No single strategy will control, eliminate and eventually eradicate malaria; rather, a multi-pronged, sustainable approach is needed that combines vector and parasite control, treatment, vaccination, monitoring and surveillance. Malaria vector control aims to reduce vector capacity below the threshold needed to maintain the malaria infection rate by draining breeding sites, using insecticides and preventing human contact through screens and bed nets. The primary interventions have been long-lasting insecticide-treated nets (LLINs/ITNs), indoor residual spraying (IRS) of long-lasting insecticides, larvicides that target the mosquitoes' larval life stage, biological agents such as larvivorous fish and fungi, and environmental management such as draining the bodies of still water that serve as mosquito breeding grounds. The figure below summarizes the advantages of these interventions.

Vector Control, Transmission Blocking, Elimination and Eradication Strategies

Intervention Strategies [Adapted from Birkholtz et al. Malaria Journal 2012, 11:431]

Malaria is a complex problem, and environmental factors make control and eventual eradication much more challenging. High-income Western countries with minimal terrain conducive to mosquito breeding were able to drain swampy breeding grounds and use DDT indiscriminately, which eventually led to the eradication of malaria in their regions. In contrast, poor developing nations where malaria is still endemic face many environmental challenges to eradication. The vast tropical landscape of these endemic regions makes draining and spraying difficult. Moreover, the controversy surrounding DDT and the rise in resistance to insecticides force modern health professionals in these developing regions to limit insecticide use and look towards alternative and innovative interventions.

Click here for a map and article on insecticide resistance

Previous World Health Organization (WHO) guidelines recommended that all fevers in malarial regions be treated presumptively with antimalarial drugs. However, declining malarial transmission in parts of sub-Saharan Africa and Asia, declining proportions of fevers due to malaria, the availability of rapid diagnostic tests and the emergence of drug-resistant malaria have led to a review and change in global practices.

Current Antimalarial Medicines

Antimalarials attack the parasite by various mechanisms and have a long history of use, dating back to the 17th century with quinine. Until recently, the derivative chloroquine was the most widely used antimalarial for prevention and treatment. Introductions in the latter years of the 20th century included sulfadoxine-pyrimethamine, amodiaquine, mefloquine and atovaquone. One of the most important antimalarial drugs today, artemisinin, is extracted from the leaves of Artemisia annua (sweet wormwood) and has been used in China for the treatment of fever for over a thousand years. It is potent and rapidly active against all Plasmodium species. In P. falciparum malaria, artemisinin also kills the gametocytes, including the stage 4 gametocytes, which are otherwise sensitive only to primaquine. Artemisinin and its derivatives inhibit an essential calcium adenosine triphosphatase, PfATPase 6. Artemisinin itself has now largely given way to the more potent dihydroartemisinin and its derivatives artemether, artemotil and artesunate; these three derivatives are converted back in vivo to dihydroartemisinin. These are critical antimalarials and should be given as combination therapy to protect them from resistance (WHO Malaria Treatment Guidelines, 2010).

The Importance of Combination Therapy

Widespread and indiscriminate use of antimalarials exerts a strong selective pressure on malaria parasites to develop high levels of resistance. Resistance can be prevented, or its onset slowed considerably, by combining antimalarials with different mechanisms of action and ensuring very high cure rates through full adherence to correct dose regimens (WHO Malaria Treatment Guidelines, 2010). As a result of the spread of resistance to monotherapies, the WHO adopted a policy in 2001 that recommends combination therapy (treatment using two unrelated drugs) and advises that one of the drugs should be an artemisinin derivative. This has led to the increased use of artemisinin-based combination therapies (ACTs) as the global frontline treatment, and these appear to be potent and effective in malaria-endemic regions (Baird, J Kevin, Effectiveness of Antimalarial Drugs. The New England Journal of Medicine. 2013; 352:15).

Combination therapy reduces the likelihood that resistant strains will emerge simply by reducing the probability that a resistant mutant will survive. For example, if the probability of a random mutation that renders drug A ineffective is one in a million, and the probability of a separate, independent mutation that provides resistance to drug B is also one in a million, then the probability of having random mutations that provide resistance to both drugs is one in a million times one in a million, or one in 1,000,000,000,000. As a result, an organism with a mutation providing resistance to one of the drugs would be unable to propagate, because it would most likely be killed by the other drug.
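
The arithmetic in this example can be checked directly. The short sketch below uses the one-in-a-million figures from the text and assumes, as the example implicitly does, that the two resistance mutations arise independently, so that their probabilities multiply; the variable names are illustrative only.

```python
from fractions import Fraction

# Figures taken from the worked example in the text.
p_resist_a = Fraction(1, 1_000_000)
p_resist_b = Fraction(1, 1_000_000)

# Assumes the two mutations arise independently, so probabilities multiply.
p_resist_both = p_resist_a * p_resist_b

print(f"Resistant to drug A:         1 in {p_resist_a.denominator:,}")
print(f"Resistant to both A and B:   1 in {p_resist_both.denominator:,}")
```

Exact fractions are used here instead of floating-point numbers so that the one-in-a-trillion result is represented without rounding.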

Novel Intervention Strategies

Challenges

Two major types of challenge remain for the development of new medicines against malaria: difficulties inherent in the nature of the disease (including virulence factors, epidemiology, and environment) and difficulties centering on drug discovery and the pharmaceutical industry. Emergence and spread of resistance are always major concerns in infectious disease, and recent reports in the literature confirm decreased patient responses to artemisinin derivatives in South-East Asia, combined with decreasing efficacy of the partner drugs used in artemisinin combination therapy (ACT). Replacements for artemisinin-based endoperoxides and combination partners are urgently required. Ideally, at least one component needs to be as fast-acting as the artemisinin derivatives to provide rapid relief of symptoms, and as affordable as chloroquine was when it was used as first-line treatment. Modeling studies underline the key role that medicines can play in malaria eradication. Medicines can be used both to treat patients' symptoms and cure them of acute disease and for prophylaxis or chemoprotection, and they can play a complementary role alongside a partially effective vaccine. In addition, there are indications that the mosquito vector (Anopheles) is developing behavioral strategies to evade insecticide-treated nets, and resistance to the pyrethroid class of insecticides used in the nets is increasing. The cost of failure in malaria control is high: the historical experience with chloroquine and DDT resistance shows that the loss of frontline interventions can have a devastating effect if a new generation of therapies and other interventions is not available.

The goal of long-term malaria eradication brought to light several issues relating to drug discovery for novel antimalarial therapies. First, the new medicines need to be able to reduce and, ideally, prevent transmission. Additionally, they need to safely prevent disease relapses with Plasmodium vivax and Plasmodium ovale, which can occur when dormant infections re-emerge, even after leaving malaria endemic sites. Significant post-treatment prophylaxis (treatment of a malaria case providing protection against future infection) may help to reduce the clinical burden of malaria especially in high-transmission areas, but currently available drugs are too toxic and/or expensive for this to be possible at this time. Lastly, new medicines will be needed for chemoprotection (causal or chemoprophylaxis) for vulnerable populations such as infants and expectant mothers. All of these medicines must be safe enough for use in sensitive patient groups, including pregnant women, the youngest of children and patients with other co-morbidities, such as HIV and TB infection, or malnutrition, and correct doses must be selected for each group. It is important to emphasize that due to the combination of these constraints there is unlikely to be a one-size-fits-all solution in the campaign to eliminate malaria; and many of these issues cannot be tested or addressed until Phase IV, after extensive and expensive trials have already occurred.

Malaria Vaccine

Malaria vaccines are considered among the most important modalities for the potential prevention of malaria and reduction of malaria transmission. Research and development in this field has been an area of intense effort by many groups over the last few decades. Despite this, there is currently no licensed, commercially available malaria vaccine, because the complexity of the malaria parasite makes vaccine development very difficult. Over 20 subunit vaccine constructs are currently being evaluated in clinical trials or are in advanced preclinical development.

There are a number of ongoing attempts to develop an effective vaccine for malaria, but at this writing, RTS,S is the most promising and is being evaluated in phase 3 clinical trials in Africa. This vaccine was developed through a partnership between GlaxoSmithKline Biologicals, the PATH Malaria Vaccine Initiative (MVI) and the Bill & Melinda Gates Foundation. Phase 2 trials suggested about a 50% reduction of malaria in children, although they also suggested that effectiveness waned over time. If the phase 3 trials confirm the safety and efficacy of the vaccine, it could be widely available in 5-10 years. Unfortunately, RTS,S provides protection against Plasmodium falciparum only, with no protection against P. vivax malaria.

Genetically Engineered Mosquitoes

Current insecticide-based vector control strategies, such as insecticide-impregnated bed nets, as well as other population-suppression strategies [e.g., the sterile-insect technique (SIT) or RIDL (release of insects with dominant lethality)], have the drawback of creating an empty ecological niche. Since the environment in which mosquitoes thrive remains unchanged, the mosquito population will return to its original density when the intervention ends or when mosquitoes become resistant to the insecticide. As a result, any population-suppression strategy would need to be implemented indefinitely. An alternative approach would be to hinder the ability of the mosquito to support parasite development in order to reduce or eliminate transmission. For example, it might be possible to genetically modify the mosquito in a way that enables secretion into its midgut of gene products that inhibit parasite development.
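
The empty-niche argument can be illustrated with a simple logistic growth model in which the environment's carrying capacity stays fixed and an intervention only adds mortality while it is running. This is a toy sketch rather than a calibrated mosquito model; the function name and all parameter values are hypothetical.

```python
def simulate_mosquito_population(days, intervention_days, capacity=10_000.0,
                                 growth_rate=0.2, extra_mortality=0.15):
    """Discrete logistic growth with added mortality while an intervention runs.

    All parameter values are hypothetical and chosen only for illustration.
    """
    population = capacity
    history = [population]
    for day in range(days):
        growth = growth_rate * population * (1.0 - population / capacity)
        killed = extra_mortality * population if day < intervention_days else 0.0
        population = max(population + growth - killed, 0.0)
        history.append(population)
    return history

history = simulate_mosquito_population(days=300, intervention_days=100)
print(f"start: {history[0]:.0f}")
print(f"end of intervention: {history[100]:.0f}")
print(f"200 days after it stops: {history[-1]:.0f}")
```

Because the carrying capacity never changes in this sketch, the suppressed population climbs back toward its original density once the added mortality stops, which mirrors the reasoning for why suppression would have to continue indefinitely.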

Transgenesis and Paratransgenesis

Transgenesis and paratransgenesis are two promising new means of interfering with Plasmodium development in, or infection of, the vector mosquito by delivering anti-Plasmodium effector molecules within the mosquito. Both are population-replacement strategies that, once implemented, should require much less follow-up effort than population-suppression strategies. Rather than reducing mosquito populations and creating an empty niche, these strategies work to render the current population of mosquitoes unable to harbor and transmit the malaria parasite.

Mosquito transgenesis has the advantage of having no off-target effects because transgene expression is restricted to the engineered mosquito. The anti-Plasmodium effector genes can be engineered to express in specific tissues (midgut, fat body, and salivary glands), only in females, and in a blood-induced manner.

Paratransgenesis refers to an alternative approach for delivery of effector molecules via the genetic modification of mosquito symbionts. Advantages of paratransgenesis are the simplicity of genetic modification of bacteria, the ease of growing the genetically modified bacteria at large scale, the fact that it bypasses genetic barriers between reproductively isolated mosquito populations, and the fact that its effectiveness does not appear to be influenced by mosquito species.

Concerns with Genetic Modification

A major challenge is the development of effective means to introduce engineered bacteria into field mosquito populations. This may be accomplished by placing baiting stations (cotton balls soaked with sugar and bacteria, placed in clay jar refuges) around villages, using engineered symbiotic bacteria that are vertically and horizontally transmitted among mosquito populations. However, no experimental evidence for the effectiveness of such an approach is presently available. Moreover, for future use in the field, the effector genes need to be integrated into the bacterial genome to avoid gene loss and also to minimize the risk of horizontal gene transfer.

Although many technical aspects have been successfully addressed, several major issues need to be resolved before transgenesis and paratransgenesis can be implemented in the field. Regulatory, ethical, and public acceptance issues relating to the release of GM organisms in nature must be resolved before these interventions can move forward. Although the subject of genetically modified organisms is controversial, its resolution will ultimately rely on weighing risks against benefits. As these issues are considered, the benefit of saving lives should provide a strong argument in favor of these approaches.

Neither transgenesis nor paratransgenesis is a cure-all solution for malaria control. Rather, both are envisioned as complements to existing and future control measures. In this regard, transgenesis and paratransgenesis are compatible with each other (possibly additive) and with insecticides and population-suppression approaches. Moreover, because of the diversity of effector proteins, neither approach is unique to malaria; both might be extended to the control of other major mosquito-borne diseases, such as dengue and yellow fever.

Outlook for Eradication

Global eradication of malaria is technically possible, but currently not feasible. Since malaria has a limited natural primate reservoir and the parasite-vector lifecycle is complex and vulnerable, multi-pronged intervention strategies could, in theory, eradicate the disease. Eradication is a long way off, and would require stronger tools for detection, prevention, and treatment than are currently available. An eradication strategy must begin with an inclusive dialogue that elucidates a clear global strategy and connects researchers, information, and funding to promote a unified approach. Eradication also requires better vector and parasite control methods, since current practices either promote resistance or leave populations vulnerable to re-emergence. Novel treatments are needed to combat resistance to antimalarials, and these should ideally be non-toxic and inexpensive enough for mass administration and prophylaxis, where appropriate. Perhaps most importantly, detection and surveillance systems need to be implemented and supported across national borders, to pinpoint and control outbreaks early on, and to maintain vigilance for disease re-emergence and vector encroachment in places where the disease has been eliminated.

Current strategies and those in development, when used in combination, have the potential to reduce the global burden of malaria to lower levels than have ever been possible. For the first time in over 40 years, eradication is no longer being discussed as a fantastical Holy Grail, but as something that is actually possible, if far off.

Below are two provocative talks from TED.com.

- TED Video: Sonia Shah: 3 reasons we still haven't gotten rid of malaria

- TED Video: Bill Gates: Mosquitos, malaria and education

References

- Arguin, Paul M., and Kathrine R. Tan. "Malaria - Chapter 3 - 2014 Yellow Book | Travelers' Health | CDC." N. p., 9 Aug. 2013. Web. 1 Dec. 2013.

- Baird, J. Kevin. "Effectiveness of Antimalarial Drugs." The New England Journal of Medicine 352.15 (2013).

- CDC-Centers for Disease Control and Prevention. "CDC - Malaria - About Malaria - Biology - Mosquitoes - Anopheles Mosquitoes." N. p., 8 Feb. 2010. Web. 1 Dec. 2013.

- CDC-Centers for Disease Control and Prevention. "CDC - Malaria - Malaria Worldwide - Impact of Malaria." N. p., n.d. Web. 2 Dec. 2013.

- DPDx - Malaria. N. p., 20 July 2009. Web. 28 Nov. 2013.

- Goh, Xiang T. et al. "Increased Detection of Plasmodium Knowlesi in Sandakan Division, Sabah as Revealed by PlasmoNex™." Malaria Journal 12.1 (2013): 264. www.malariajournal.com. Web. 28 Nov. 2013.

- Hommel, Marcel. "4.3.2 Plasmodium Vivax." Impact Malaria. N. p., 9 Aug. 2012. Web. 28 Nov. 2013.

- Ketema, Tsige, and Ketema Bacha. "Plasmodium Vivax Associated Severe Malaria Complications Among Children in Some Malaria Endemic Areas of Ethiopia." BMC Public Health 13.1 (2013): 637. www.biomedcentral.com. Web. 29 Nov. 2013.

- Schneider, Petra, et al. "Does the Drug Sensitivity of Malaria Parasites Depend on Their Virulence?" Malaria Journal 7.1 (2008): 257.

- Wang, Sibao, and Marcelo Jacobs-Lorena. "Genetic Approaches to Interfere with Malaria Transmission by Vector Mosquitoes." Trends in Biotechnology 31.3 (2013): 185-193.

- Stein, Wilfred D., Cecilia P. Sanchez, and Michael Lanzer. "Virulence and Drug Resistance in Malaria Parasites." Trends in Parasitology 25.10 (2009): 441-443.

- Westling, J et al. "Plasmodium Falciparum, P. Vivax, and P. Malariae: a Comparison of the Active Site Properties of Plasmepsins Cloned and Expressed from Three Different Species of the Malaria Parasite." Experimental parasitology 87.3 (1997): 185-193. NCBI PubMed. Web. 28 Nov. 2013.

- WHO | Malaria. WHO. N. p., n.d. Web. 27 Nov. 2013.

- WHO Malaria Treatment Guidelines. 2010.

- Wilairatana, Polrat, Noppadon Tangpukdee, and Srivicha Krudsood. "Definition of Hyperparasitemia in Severe Falciparum Malaria Should Be Updated." Asian Pacific Journal of Tropical Biomedicine 3.7 (2013): 586. PubMed Central. Web. 3 Dec. 2013.

It is widely believed that drug resistance develops only in the presence of inadequate chemotherapy regimens, for example the administration of sub-optimal doses that do not eliminate infection but instead select for resistant strains. Recent work by Schneider et al. suggests an alternative pathway for drug resistance to develop, and their conclusions have far-reaching implications for both the development of drug resistance and our ability to combat it. Schneider et al. infected mice with two genetically related strains of P. chabaudi, one virulent and one avirulent, and discovered that the virulent strain had a high level of resistance to pyrimethamine. The virulent strain had not developed this resistance through exposure to low doses of the drug; rather, resistance developed in conjunction with other virulence factors. In essence, the resistance mechanism conferred fitness, rather than exacting a fitness cost on the organism.
